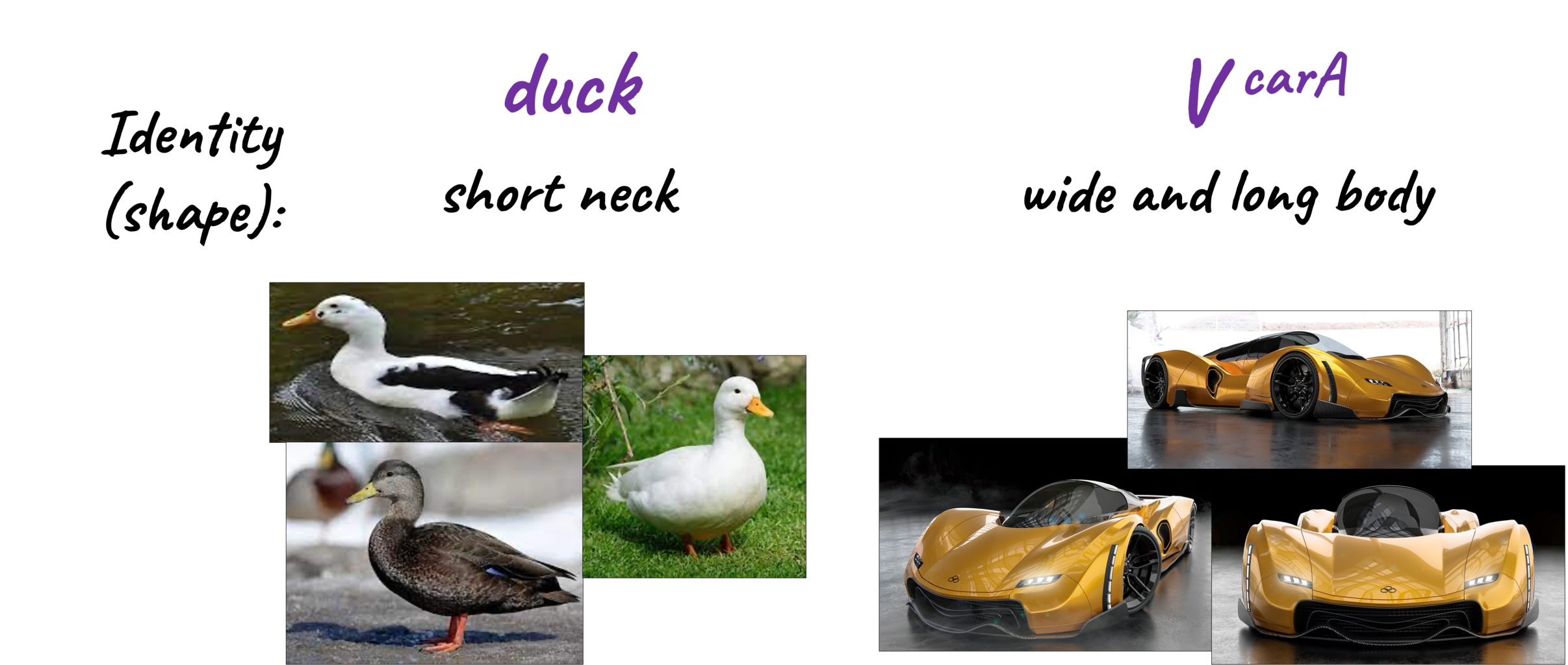

VideoSwap: Customized Video Subject Swapping with

Interactive Semantic Point Correspondence

Supplementary Material

Please refresh the webpage if the content has not loaded.

- User-Point Interaction Demo Video

- Qualitative Results of VideoSwap

- Qualitative Comparison

- Ablation Studies

User-Point Interaction Demo Video

We define the following two types of user-point interaction on the keyframe:

- The user defines semantic points at a specific keyframe.

- The user selects or drags the semantic point at the keyframe.

The accompanying demo video illustrates how users interact with semantic points to edit the video.

Qualitative Results of VideoSwap

1. Animal Swap

|

|---|

Please scroll right for more results.

| Source Video: "A kitten turning its head on a wooden floor." | kitten -> V catA | kitten -> V dogA | kitten -> V dogB | kitten -> panda | kitten -> monkey |

|---|---|---|---|---|---|

| Source Video: "A cat walking on a piano keyboard." | cat -> V catA | cat -> V dogA | cat -> V dogB | cat -> panda | cat -> monkey |

| Source Video: "A black swan swimming in a pond." | black swan -> V catA | black swan -> V dogA | black swan -> V dogB | black swan -> duck | black swan -> seal |

| Source Video: "A monkey sitting on the ground eating something." | monkey -> V catA | monkey -> teddy bear | monkey -> tiger | monkey -> cow | monkey -> wolf |

| Source Video: "An elk standing and turning its head in a field." | elk -> V dogB | elk -> tiger | elk -> cow | elk -> lion | elk -> pig |

| Source Video: "A dog sitting on the side of a car window looking out the window." | dog -> V catA | dog -> V dogA | dog -> V dogB | dog -> wolf | dog -> teddy bear |

Please scroll right for more results.

2. Object Swap

|

|---|

| Source Video: "A silver jeep driving down a curvy road in the countryside." | silver jeep -> V carA | silver jeep -> V porsche | silver jeep -> truck |

|---|---|---|---|

| Source Video: "A car driving down a road with wind turbine and grass." | car -> V carA | car -> V porsche | car -> van |

| Source Video: "An airplane flying above the clouds in the sky." | airplane -> V jet | airplane -> helicopter | airplane -> balloon |

| Source Video: "An airplane flying above the clouds in the sky." | airplane -> V jet | airplane -> helicopter | airplane -> UFO |

| Source Video: "A boat is traveling through the water near a rocky shore." | boat -> V yacht | boat -> V sailboat | boat -> canoe |

| Source Video: "A boat is traveling through the sea." | boat -> V yacht | boat -> V sailboat | boat -> canoe |

Qualitative Comparison

1. Compare to Previous Video-Editing Methods

We compare VideoSwap with following video-editing methods:

- Dense Correpondence: Rerender-A-Video [1], TokenFlow [2], Text2Video-Zero [3], StableVideo [4]

- Implicit Motion Encoding: Tune-A-Video [5], FateZero [6]

We utilize pre-defined concepts in the pretrained model and retrieve several images for shape reference. In comparison with previous methods, VideoSwap can reveal the correct shape of a given concept while aligning the motion of the source video.

|

|---|

Please scroll right for more comparisons.

| Source Video | VideoSwap (Ours) | Rerender-A-Video | TokenFlow | Text2Video-Zero | StableVideo | Tune-A-Video | FateZero |

|---|---|---|---|---|---|---|---|

| Source Prompt: "An airplane flying above the clouds in the sky." | airplane -> helicopter | airplane -> helicopter | airplane -> helicopter | airplane -> helicopter | airplane -> helicopter | airplane -> helicopter | airplane -> helicopter |

| Source Prompt: "A black swan swimming in a pond." | black swan -> duck | black swan -> duck | black swan -> duck | black swan -> duck | black swan -> duck | black swan -> duck | black swan -> duck |

| Source Prompt: "A silver jeep driving down a curvy road in the countryside." | silver jeep -> convertible | silver jeep -> convertible | silver jeep -> convertible | silver jeep -> convertible | silver jeep -> convertible | silver jeep -> convertible | silver jeep -> convertible |

| Source Prompt: "An elk standing and turning its head in a field." | elk -> tiger | elk -> tiger | elk -> tiger | elk -> tiger | elk -> tiger | elk -> tiger | elk -> tiger |

Please scroll right for more comparisons.

2. Compare to Baselines on AnimateDiff

We also compare with several baselines built upon AniamteDiff [7]. The only difference with our method is in motion injection:

- DDIM-Only (i.e., no motion injection): Without motion injection, the target swap cannot align with the motion of the source subject.

- DDIM + Tune-A-Video (i.e., implicit motion injection): Through implicit motion injection by tuning from the source video, the appearance and shape of the source subject will leak into the target swap, degrading the video quality.

- DDIM + T2I-Adapter (i.e., dense motion injection): With a depth map as a condition, the shape of the target subject needs to conform to the source subject and cannot align the non-rigid motion with the source subject.

- Our VideoSwap ---DDIM + Semantic Points (i.e., sparse motion injection): With sparse semantic points as correspondence, the target swap aligns with the motion of the source subject and loosens the shape constraints.

|

|---|

Please scroll right for more comparisons.

| Source Video | VideoSwap (Ours) | DDIM-Only | DDIM + Tune-A-Video | DDIM + T2I-Adapter |

|---|---|---|---|---|

|

|

||||

| Source Prompt: "An airplane flying above the clouds in the sky." | airplane -> V jet | airplane -> V jet | airplane -> V jet | airplane -> V jet |

|

|

||||

| Source Prompt: "A dog sitting on the side of a car window looking out the window." | dog -> V catA | dog -> V catA | dog -> V catA | dog -> V catA |

|

|

||||

| Source Prompt: "A boat is traveling through the water near a rocky shore." | boat -> V sailboat | boat -> V sailboat | boat -> V sailboat | boat -> V sailboat |

|

|

||||

| Source Video: "A monkey sitting on the ground eating something." | monkey -> teddy bear | monkey -> teddy bear | monkey -> teddy bear | monkey -> teddy bear |

Please scroll right for more comparisons.

Ablation Studies

In this section, we provide ablation study results of our method as mentioned in the paper.

Please refer to Sec. 4.3 in the paper for more details.

1. Sparse Motion Feature

To incorporate semantic points as correspondence, we generate sparse motion features by placing the projected DIFT-Embedding in an empty feature. When compared to other point encoding variants, this method yields superior motion alignment and video quality, with the least registration time-cost.

Please scroll right for more comparisons.

| Source Video | DIFT Embedding + MLP (Ours) 100 Iters |

Point Map + T2I-Adapter 100 Iters |

Learnable Embedding + MLP 100 Iters |

Learnable Embedding + MLP 300 Iters |

|---|---|---|---|---|

|

|

||||

| Source Prompt: "A monkey sitting on the ground eating something." | monkey -> tiger | monkey -> tiger | monkey -> tiger | monkey -> tiger |

|

|

||||

| Source Prompt: "A dog sitting on the side of a car window looking out the window." | dog -> V catA | dog -> V catA | dog -> V catA | dog -> V catA |

Please scroll right for more comparisons.

2. Point Patch Loss

To enhance the learning of semantic point correspondence, we limit the computation of diffusion loss to a small patch around each semantic point. This approach prevents the structure of the source subject from leaking into the target swap, eliminating artifacts caused by structure leakage.

| Source Video | w/ Point Patch Loss (Ours) | w/o Point Patch Loss |

|---|---|---|

| Source Prompt: "An elk standing and turning its head in a field." | elk -> tiger | elk -> tiger |

| Source Prompt: "A cat is walking on the floor at a room." | cat -> V dogB | cat -> V dogB |

| Source Prompt: "An airplane flying above the clouds in the sky." | airplane -> helicopter | airplane -> helicopter |

3. Semantic-Enhanced Schedule

To enhance the learning of semantic point correspondence, we prioritize registering semantic points at higher timesteps (i.e., \(t \in [0.5T, T)\)), thereby enhancing semantic point alignment.

| Source Video | Register Semantic Point at \(t \in [0.5T, T)\) (Ours) |

Register Semantic Point at \(t \in [0, T)\) |

|---|---|---|

| Source Prompt: "A dog sitting on the side of a car window looking out the window." | dog -> V catA | dog -> V catA |

| 14-th source frame (semantic point visualization) | Register Semantic Point at \(t \in [0.5T, T)\) (Ours) |

Register Semantic Point at \(t \in [0, T)\) |

|

|

|

| Source Video | Register Semantic Point at \(t \in [0.5T, T)\) (Ours) |

Register Semantic Point at \(t \in [0, T)\) |

| Source Prompt: "A silver jeep driving down a curvy road in the countryside." | silver jeep -> V porsche | silver jeep -> V porsche |

| 14-th source frame (semantic point visualization) | Register Semantic Point at \(t \in [0.5T, T)\) (Ours) |

Register Semantic Point at \(t \in [0, T)\) |

|

|

|

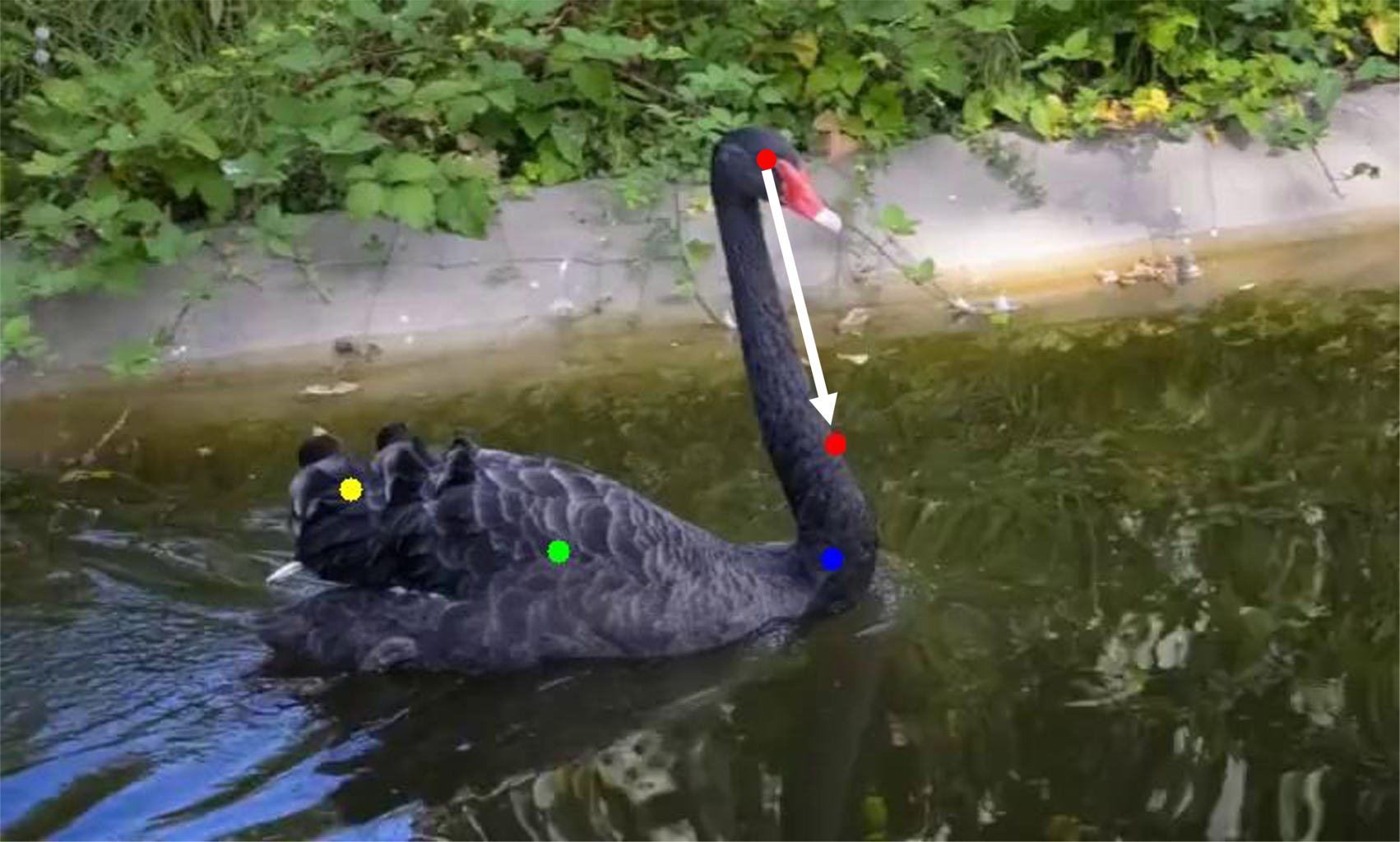

4. Drag-based Point Control

VideoSwap supports dragging point at one keyframe. We propagate the dragged displacement throughout the entire video, resulting in a consistent dragged trajectory. By adopting the dragged trajectory as motion guidance, we can reveal the correct shape of target concept.

|

|---|

Keyframe  Source Prompt: "A black swan swimming in a pond." Target Swap: black swan -> duck |

Source Point Trajectory | Result Guided by Source Point Trajectory |

|---|---|---|

| Dragged Point Trajectory | Result Guided by Dragged Point Trajectory | |

Keyframe  Source Prompt: "A silver jeep driving down a curvy road in the countryside." Target Swap: silver jeep -> V carA |

Source Point Trajectory | Result Guided by Source Point Trajectory |

| Dragged Point Trajectory | Result Guided by Dragged Point Trajectory | |

References

[1] Shuai Yang, Yifan Zhou, Ziwei Liu and Chen Change Loy. Rerender A Video: Zero-Shot Text-Guided Video-to-Video Translation. SIGGRAPH Asia, 2023.

[2] Michal Geyer, Omer Bar-Tal, Shai Bagon and Tali Dekel. Tokenflow: Consistent diffusion features for consistent video editing. arXiv preprint arXiv:2307.10373, 2023.

[3] Levon Khachatryan, Andranik Movsisyan, Vahram Tadevosyan, Roberto Henschel, Zhangyang Wang, Shant Navasardyan and Humphrey Shi. Text2video-zero: Text-to-image diffusion models are zero-shot video generators. ICCV, 2023.

[4] Wenhao Chai, Xun Guo, Gaoang Wang and Yan Lu. Stablevideo: Text-driven consistency-aware diffusion video editing. ICCV, 2023.

[5] Jay Zhangjie Wu, Yixiao Ge, Xintao Wang, Weixian Lei, Yuchao Gu, Wynne Hsu, Ying Shan, Xiaohu Qie and Mike Zheng Shou. Tune-a-video: One-shot tuning of image diffusion models for text-to-video generation. ICCV, 2023.

[6] Chenyang Qi, Xiaodong Cun, Yong Zhang, Chenyang Lei, Xintao Wang, Ying Shan and Qifeng Chen. FateZero: Fusing Attentions for Zero-shot Text-based Video Editing. ICCV, 2023.

[7] Yuwei Guo, Ceyuan Yang, Anyi Rao, Yaohui Wang, Yu Qiao, Dahua Lin, and Bo Dai. Animatediff: Animate your personalized text-to-image diffusion models without specific tuning. arXiv preprint arXiv:2307.04725, 2023.